Exactly 1 out of 143 clients in the last 3 years has bothered with email marketing A/B testing. That’s not surprising, since many clients don’t have “absolutely anything to do” with email marketing itself.

I mean, who the heck bothers with old, boring email marketing when there are so many hot social media platforms out there?

If you ignore email marketing, everything else you do in digital marketing amounts to sweating the small stuff and doing exactly what you shouldn’t be doing.

Why bother with email marketing A/B testing?

Assuming you’re (hopefully) convinced that you should do email marketing, let’s look into the “why” of email marketing A/B testing.

- You do A/B testing because you want to find out what works best for your business.

- Data-driven marketing is the only kind of marketing you want to depend on.

- When the average email marketing campaign reportedly delivers a 4,300% ROI, it just makes sense to fine-tune and optimize one of the most profitable digital marketing channels out there.

- As always, you never know which subject lines, email copy, or delivery timing will work best with your audience until you test them.

Now, back to email A/B testing: it works just like A/B testing for ads, landing pages, websites, and everything else. You send two (or more) variants to comparable groups of recipients and see which one performs better.

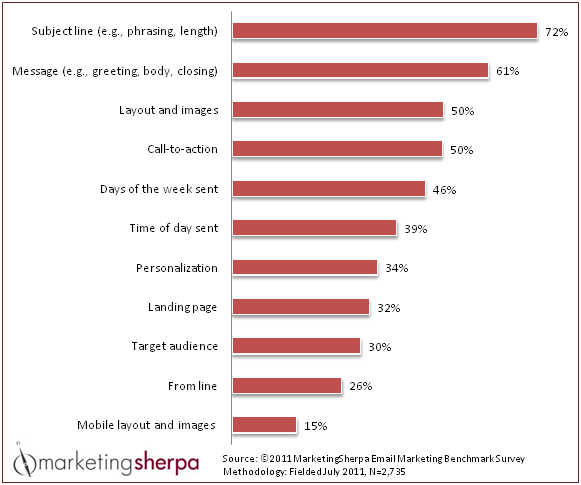

With email marketing A/B testing specifically, you can test:

- Subject lines

- The “from name” or the way sender details are presented

- The content of the email

- A particular segment or a set of recipients

- The time zone the emails are sent in

- Particular days of the week the emails are sent

- Recipient group size, and more.
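Most ESPs handle the recipient split for you, but it helps to see what “testing on a group of recipients” means in practice. Here’s a minimal sketch, assuming a plain list of email addresses and a hypothetical `split_for_ab_test` helper (not any particular ESP’s API): it randomly carves out two equal test groups and leaves the rest of the list as a holdout that later gets the winning email.

```python
import random

def split_for_ab_test(recipients, test_fraction=0.2, seed=None):
    """Randomly split recipients into group A, group B, and a holdout.

    test_fraction (a hypothetical parameter) is the share of the list used
    for the test, divided equally between A and B. The holdout is meant to
    receive whichever variant wins.
    """
    if not 0 < test_fraction <= 1:
        raise ValueError("test_fraction must be in (0, 1]")
    rng = random.Random(seed)       # seeded RNG so a split can be reproduced
    shuffled = list(recipients)     # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    test_size = int(len(shuffled) * test_fraction)
    half = test_size // 2
    group_a = shuffled[:half]
    group_b = shuffled[half:2 * half]
    holdout = shuffled[2 * half:]
    return group_a, group_b, holdout

# Hypothetical list of 1,000 subscribers
recipients = [f"user{i}@example.com" for i in range(1000)]
a, b, rest = split_for_ab_test(recipients, test_fraction=0.2, seed=42)
print(len(a), len(b), len(rest))  # 100 100 800
```

Random assignment matters: if group A were, say, your newest subscribers, you’d be testing audiences instead of emails.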

According to MarketingSherpa, here’s a full list of the elements you could run email marketing tests on:

The winning email is decided by a criterion you set up. Common criteria include open rate, click rate (total unique clicks), total clicks on a particular link, and more.
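To make “deciding the winner on a criterion” concrete, here’s a small sketch with hypothetical numbers. It assumes open rate = unique opens / delivered and click rate = unique clicks / delivered; ESPs define these slightly differently (some report click-to-open rate instead), so treat the formulas as illustrative. The `VariantStats` class and `pick_winner` function are made up for this example.

```python
from dataclasses import dataclass

@dataclass
class VariantStats:
    name: str
    delivered: int
    unique_opens: int
    unique_clicks: int

    @property
    def open_rate(self) -> float:
        # Unique opens as a share of delivered emails (assumed definition)
        return self.unique_opens / self.delivered if self.delivered else 0.0

    @property
    def click_rate(self) -> float:
        # Unique clicks as a share of delivered emails (assumed definition)
        return self.unique_clicks / self.delivered if self.delivered else 0.0

def pick_winner(variants, criterion="open_rate"):
    """Return the variant with the highest value for the chosen criterion."""
    if criterion not in ("open_rate", "click_rate"):
        raise ValueError(f"unsupported criterion: {criterion}")
    return max(variants, key=lambda v: getattr(v, criterion))

# Hypothetical results, for illustration only
a = VariantStats("Subject line A", delivered=5000, unique_opens=1100, unique_clicks=150)
b = VariantStats("Subject line B", delivered=5000, unique_opens=1250, unique_clicks=140)
print(pick_winner([a, b], "open_rate").name)   # Subject line B
print(pick_winner([a, b], "click_rate").name)  # Subject line A
```

Notice that the “winner” flips depending on the criterion, which is why it pays to pick the metric that actually maps to your goal before you hit send.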

Most email service providers (ESPs), such as Mailchimp, Drip, and Campaign Monitor, give you built-in tools for A/B testing.

If you aren’t running email marketing A/B tests, it’s probably because you weren’t aware of them, you thought they were too fancy, or you genuinely haven’t gotten around to it yet.

With email marketing workflows built on a fully customizable, automated tool like Drip (or other ESPs), you can also use A/B testing to measure the actual ROI of your campaigns.

I know you. It’s not going to be easy to convince you of something as obvious as this. So here are a few examples of how simple email marketing A/B testing experiments got results for these businesses:

WeddingWire

WeddingWire is a comprehensive website covering everything related to weddings. It lists vendors, offers wedding planning tools, and has listings for venues, photographers, DJs, planners, managers, and much more. WeddingWire also provides a ton of insights, inspiration, and reviews of individual wedding-related services.

WeddingWire’s newsletter, however, was one of those things you could easily make the mistake of “just letting be.” With millions of subscribers, who’d care if one social button didn’t seem to work as well as it should?

It does matter. When you send out emails to 24 million people or more, everything matters.

While WeddingWire had no problem getting people to click their main CTA, the team was concerned about why no one was clicking the regular row of social media buttons.

Of particular concern was why their Pinterest button wasn’t being clicked as much from within the newsletter.

WeddingWire worried about Pinterest because their users were active there, and Pinterest is a great place for information and inspiration on anything to do with weddings.

So how do you fix the regular “social button array” faux pas?

When WeddingWire started including pins from its already active Pinterest account in the newsletter, here’s what happened, according to a case study from MarketingSherpa:

“WeddingWire saw a 141% higher Pinterest growth rate compared to the brand’s average, as well as an average lift of 31% on re-pins from email. Top articles reached as high as a 180% lift in re-pins.”

All that traction just from including pins that click through to Pinterest within a newsletter.

You bet.

The Obama Campaigns

Everyone knows how successful email marketing was for Obama’s election as president and his re-election (all this, and you still need convincing that email marketing is critical for your business?).

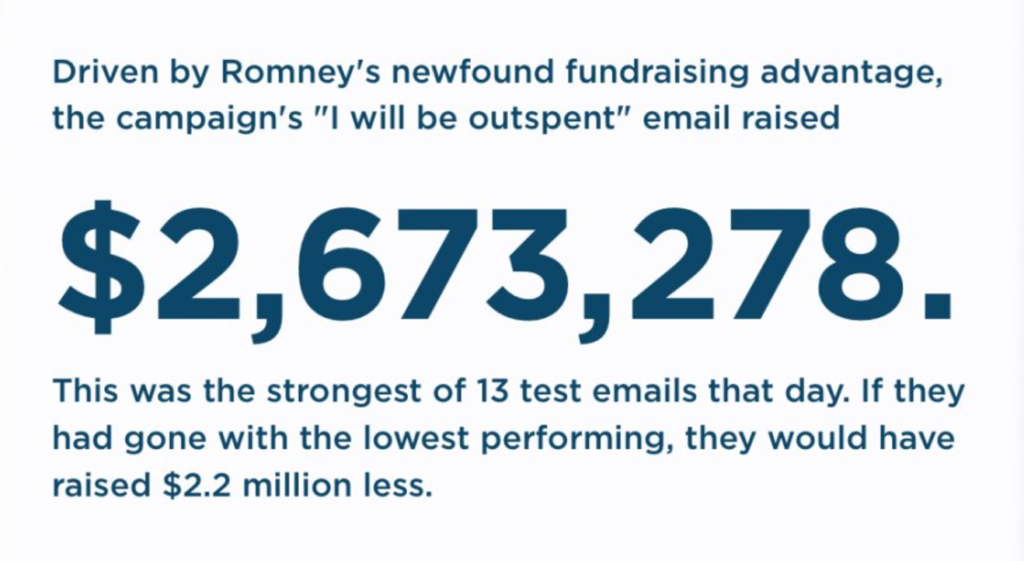

This includes the famous “I’ll be Outspent” campaign and the email whose subject line simply said “Hey”.

Everyone knows this. Joshua Green of Bloomberg has a fantastic piece on the Science Behind those Obama Campaign Emails.

But behind the email marketing strategy that helped raise more than $500 million in donations, a ton of details were being tested.

Credit goes to David Moth of Econsultancy and Amelia Showalter, Obama’s Director of Digital Analytics, for sharing these details in 2013.

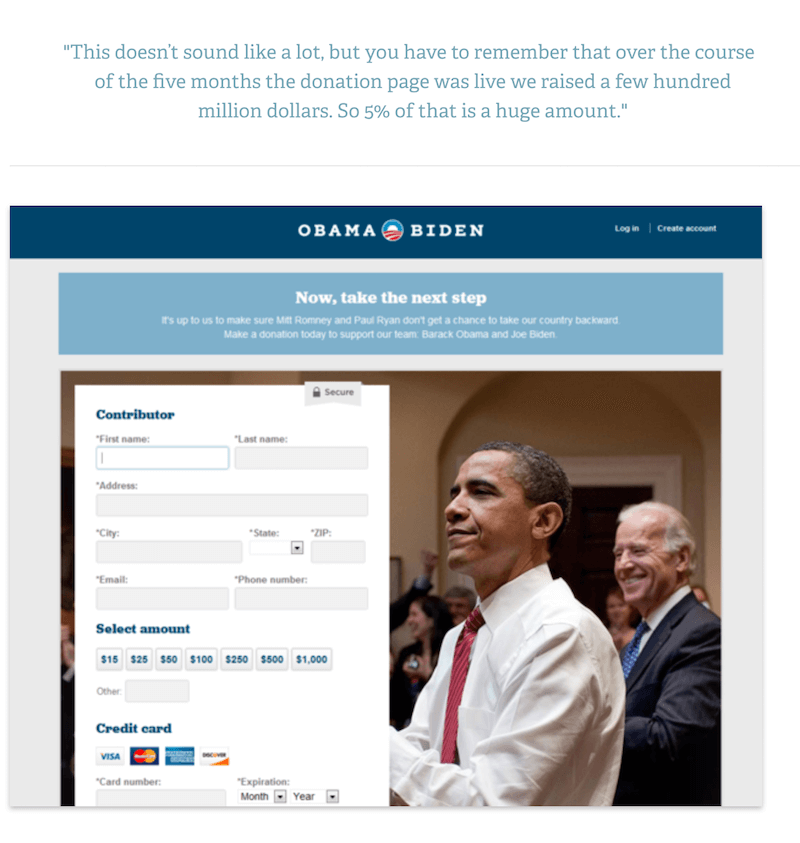

Obama’s team found that a sequential form that asks for information one detail at a time worked better than a single long form, producing a 5% lift in conversions.

As David Moth reports:

“For example, in one test on subject lines Showalter’s team found that the most effective iteration would raise $2.5m in donations, while the worst performing subject line would bring in less than $500,000.

Similarly, the team achieved a 5% uplift in conversions by A/B testing a long online donation form against a sequential format that asks for a little bit of information at a time, with the latter proving to be more effective.”

The Obama campaigns ran many more tests than these.

For instance, Obama’s team boosted its donation conversion rate by a whopping 20-30% simply by changing the wording from “Save your Payment details now to make the process quicker next time” to “Now save your Payment Information”.

Obama’s team also came away with insights that most marketers and businesses miss:

- The team fostered a culture of testing

- Ugly designs outperformed pretty-looking ones

- The test results were shared internally

- Grabby subject lines proved to be worth millions of dollars.

Who would have thought?

Optimizely

It’s nice to see a company whose very existence is built on A/B testing actually run A/B tests (and email marketing A/B tests, at that). As of 2017, Allison Sparrow of Optimizely had run a total of 82 email A/B tests, only 30% of which produced statistically significant results.

How many calls to action should you have within an email? Any sensible marketer would tell you this: one.

But do you know that for sure? You won’t know until you test.

Optimizely ran a test of one CTA vs. multiple CTAs with its existing customers.

The goal was simply to measure clicks on the CTA buttons.

The result: one focused CTA was more effective than multiple CTAs, with a 33% increase in clicks.

If you’re interested in reading more about email marketing A/B testing, Sujan Patel has a few subject line A/B test ideas on Mailshake.

But most A/B tests won’t give you meaningful results at all. The folks at Mixpanel explain why A/B tests give bullshit results:

“So if you’re sick of bullshit results, and you want to produce that 38% lift in conversions to get that pat on the back and the nice case study, then put in the work. Take the time to construct meaningful A/B tests and you’ll get meaningful results.”
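Part of “putting in the work” is checking whether a difference between variants is real or just noise (remember, only 30% of Optimizely’s email tests were significant). One common textbook check for open or click rates is a two-proportion z-test. The sketch below is that generic test, not Mixpanel’s or any ESP’s method; the function name and the click counts in the example are hypothetical, and it assumes randomly assigned groups large enough for the normal approximation.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided two-proportion z-test using the pooled standard error.

    conv_a / conv_b: number of recipients who opened or clicked in each group
    n_a / n_b: number of recipients in each group
    Returns (z, p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants perform the same
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; nothing to test")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical: 150 vs. 200 unique clicks out of 5,000 recipients per variant
z, p = two_proportion_z_test(150, 5000, 200, 5000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 5%: {p < 0.05}")
```

With 3.0% vs. 4.0% click rates on 5,000 recipients each, the difference clears the usual 5% bar; shrink it to 150 vs. 160 clicks and it no longer does, even though one variant still “won.”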

Are you doing A/B testing for your email marketing campaigns?